Why AI matters to you as a responsible investor

Artificial Intelligence (AI) is transforming how businesses operate – and how we live. It is everywhere, from personalised product recommendations to disease diagnosis. But with great power comes responsibility. Beyond creating new business models, AI can disrupt jobs and industries and raise serious environmental and ethical concerns. AI will be a significant part of future economic growth, but it must be developed responsibly to avoid long-term risks, both for society at large and for your portfolio.

Fast Growth, Fast Adoption

AI adoption has been swift: over 75% of companies use AI, and OpenAI reported over 500 million weekly users in early 2025, according to CNBC. Governments are pouring money into its growth – e.g. the US’s $500 billion Stargate Initiative and Europe’s €200 billion AI plan. Meanwhile, tech giants like Microsoft, Amazon, and Nvidia are investing heavily in AI servers and infrastructure.

AI for Good

AI is not only a driver of financial growth—it is also becoming a key tool to tackle some of the world’s biggest environmental and social challenges.

On the one hand, AI provides solutions for environmental sustainability by managing energy grids more efficiently, reducing waste, and forecasting pollution. It can provide farmers with adaptive strategies based on climate, soil, and crop data to enhance yield stability. It can also increase the reliability of clean energy sources like solar and wind by predicting the availability of power.

On the other hand, AI can help address social challenges through its applications in healthcare (disease detection, personalised treatments, drug discovery), education (personalised learning platforms, real-time feedback), and financial inclusion (alternative credit scoring) – thereby supporting the UN Sustainable Development Goals (UN SDGs) in many use cases.

The Bad, and the Ugly?

AI is changing the world by improving supply chains and customer service, and driving innovation. But its rapid rise also brings major environmental and social challenges that, if not managed, can develop into financial risks.

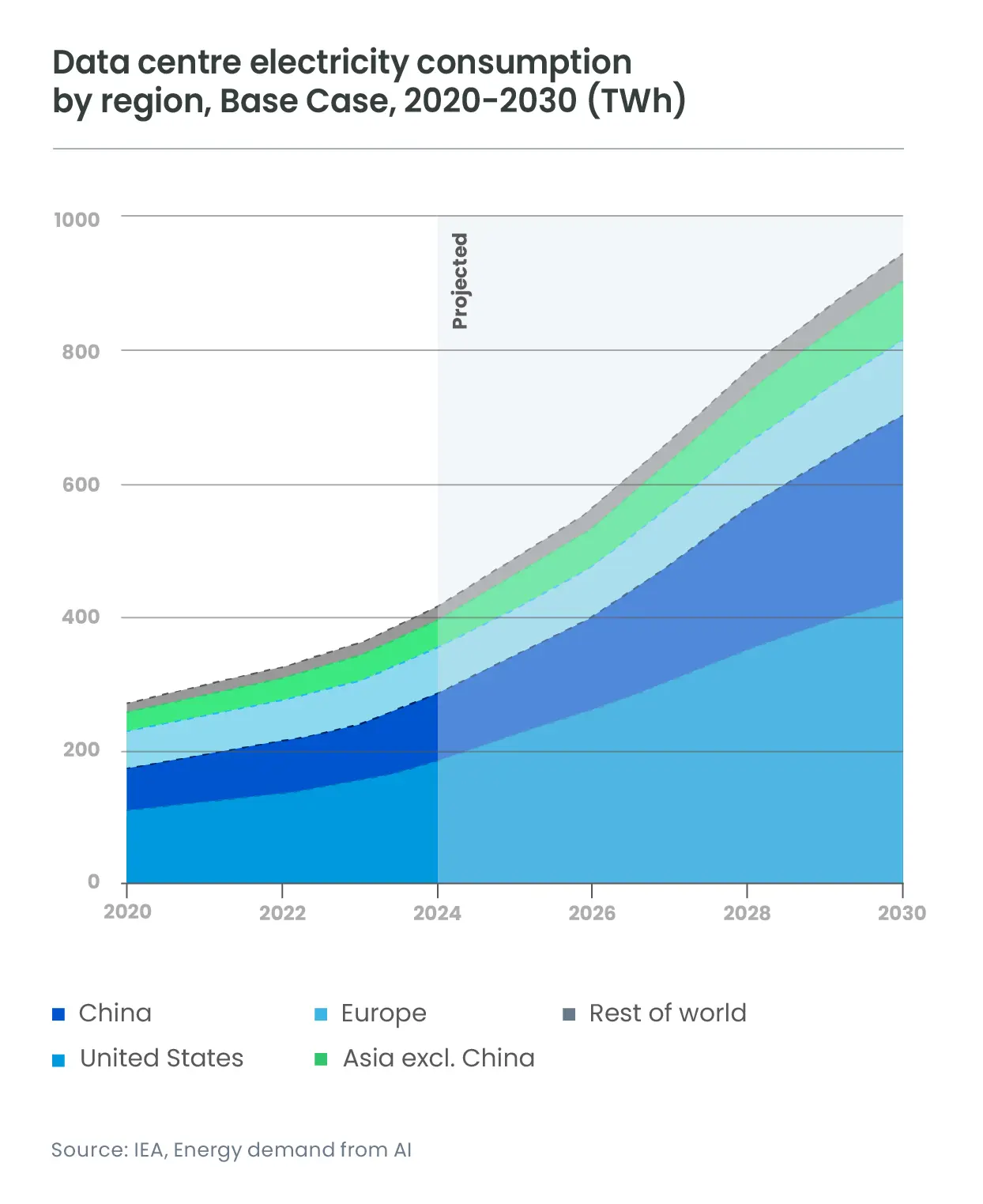

Training large AI models requires enormous amounts of electricity, and data centers could more than double their electricity demand within five years – with impacts on costs and carbon emissions. Companies that ignore this may face backlash or regulatory penalties.

Water use is also a critical concern earlier in the AI value chain, in the mining and manufacturing behind the IT hardware that runs AI software: chip factories, for example, use a lot of ultra-pure water. The water shortages that AI may contribute to not only harm ecosystems but also create risks for production, leading to higher costs for companies. When AI-related sustainability risks materialise, they affect both portfolio value and the environment.

On the social side, one of the big challenges is job displacement. AI is expected to automate many jobs (customer service, for example): 40% of jobs worldwide could be affected. Inequality could worsen in the absence of reskilling and workforce transition programs.

Additionally, the geopolitical landscape is becoming increasingly fragmented as countries compete fiercely for AI dominance.

Regulation and Governance

The regulatory landscape for AI is evolving quickly as policymakers seek to address ESG risks and the broader technology implications. But while the EU is taking the lead with the AI Act, US regulation remains fragmented, lacking a unified federal framework.

With regulation struggling to keep up with the pace of AI innovation, industry-led efforts play a key role in defining responsible AI practices [1]. While various global frameworks and initiatives (OECD, UN…) promote AI development that supports sustainability, human rights, and accountability, a growing number of companies now recognise that ethical AI is both a governance imperative and a competitive advantage. Companies that embed ethical safeguards, conduct independent audits, and implement clear governance structures will be better equipped to drive sustainable growth.

Building an ESG Approach to AI

AI presents a unique blend of opportunities and risks that have profound implications for investors. An ESG analytical framework can be a valuable tool, offering a structured approach to assessing how companies manage these AI-related risks. This framework should be tailored and flexible, given the diversity of AI applications and companies’ types of exposure to AI. Beyond looking at whether a company is using AI, investors must focus on how it is doing so, and which governance structures are in place to manage potential risks. This means analysing companies’ adoption of responsible AI principles and their transparency in disclosing human rights risk assessments – as well as how these principles translate into action, in decision-making, supply chains, and customer interactions.

Shareholder engagement is a powerful lever for investors. Not only does it support more informed investment decisions: by entering into active dialogue with companies, supporting collaborative initiatives, and exercising voting rights, investors can also encourage greater transparency around AI-related risks and safeguards, gain clarity on governance practices where public disclosures fall short, and foster the development of more responsible AI.

AI for a Better World?

An ESG lens is a powerful tool for assessing the risks and opportunities generated by the AI revolution. As stewards of capital, investors have a critical role to play in shaping AI’s trajectory by actively engaging with companies to promote responsible AI practices and mitigate the environmental and social challenges that AI poses. Moreover, collaboration with policymakers, industry leaders, and civil society is necessary to create a more transparent, accountable, and sustainable AI ecosystem.

By focusing their efforts through the ESG lens, investors can ensure that AI’s potential is harnessed for the greater good, driving long-term value and contributing to a more sustainable world.

[1] Responsible AI refers to the development and use of artificial intelligence that is ethical, transparent, fair, and accountable, prioritising user privacy, minimising bias, and preventing harmful impacts.